
Active Perception and Robot Interactive Learning

The Active Perception and Robot Interactive Learning (APRIL) laboratory focuses on the co-evolution of artificial intelligence and robotic technologies, driving breakthrough research that enables robots to perform complex tasks in real-world domains such as manufacturing, logistics, healthcare, agri-food, and more. Robots should be able to learn new skills by interacting with humans and perceiving their environments using modern robot vision and learning techniques.

The overall research theme involves the creation of robot perception and manipulation systems that augment robots' ability to work in complex and dynamic environments. This includes research in areas such as robot active perception, robot learning and manipulation, robot deep reinforcement learning, and robot sim2real learning, as well as the development of novel robot mobile manipulation systems, including smart end-effectors and sensing modules.

Laboratories

The APRIL Laboratory is part of the Advanced Robotics Department and has a range of state-of-the-art equipment that supports our research and stimulates the curiosity of our researchers. The equipment includes customized robot mobile manipulators, a Franka Emika robot arm, a Kinova GEN3 robot arm, a Schunk robot arm, robot grippers, dexterous robot hands, F/T sensors, RGB and depth cameras, and a server with high-performance GPU farms. The researchers come from multi-disciplinary backgrounds, including computer science, robotics, mechatronics, and electronics.

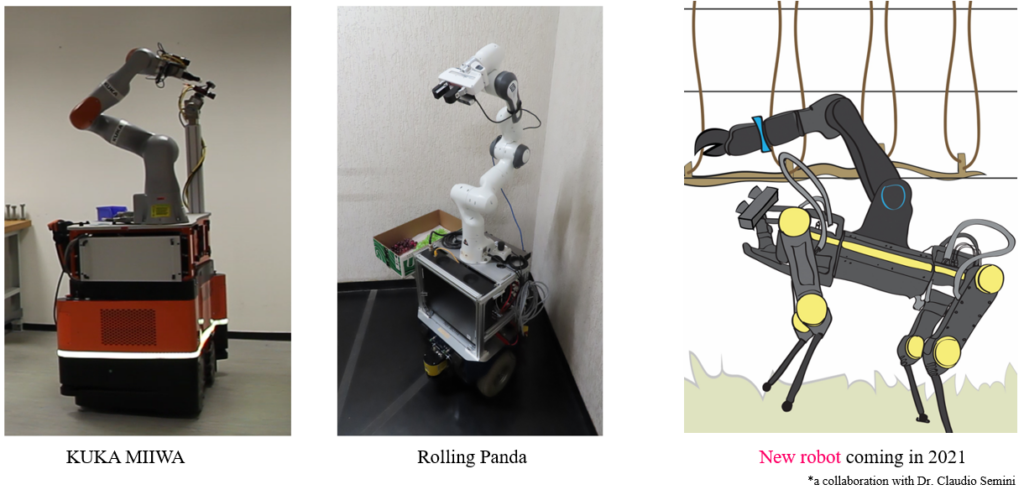

The following pictures show the mobile manipulators the APRIL Lab used in the past (KUKA MIIWA), is using at the moment (Rolling Panda), and will use in the future (a new quadruped mobile manipulator).

Research Topics

1. Learning control for robot dexterous manipulation tasks

2. Sim2Real deep reinforcement learning for robot manipulation

3. Object detection, segmentation and tracking for manipulation

4. Action-perception coupled whole-body control for mobile manipulators

5. One-shot/Few-shot learning for robot grasping and picking

Projects

AutoMAP (2015-2017) (EU FP7 EUROC AutoMAP) is the short name for “Autonomous Mobile Manipulation”, which addresses applications of robotic mobile manipulation in unstructured environments such as those found at CERN. The project is based on use-case operations to be carried out on CERN’s flagship accelerator, the Large Hadron Collider (LHC). The main objective is to carry out maintenance work using a remotely controlled robot mobile manipulator, reducing the exposure of maintenance personnel to hazards in the LHC tunnels such as ionising radiation and oxygen deficiency. A second goal is to use robot learning technologies so that the robot can autonomously carry out the same tasks on collimators in the assembly facility, during their initial build and quality assurance, as it does in the tunnel.

VINUM (2018-2023) (Italian Project) is the short name for “Grape Vine Recognition, Manipulation and Winter Pruning Automation”. The objective is to apply state-of-the-art mobile manipulation platforms and systems to various maintenance and automation tasks in the vineyard, e.g., pruning and inspection, in order to tackle the shortage of skilled workers. The platforms are a wheeled mobile platform with a commercial full torque sensing arm and multiple sensors, and a quadruped robot mobile platform under development with a customized robotic arm and multiple sensors (in collaboration with Dr. Claudio Semini). Together with project, VINUM aims to provide very robust solutions for outdoor applications that deal with all kinds of natural objects. Two activities are carried out under the project using the mobile manipulators we developed:

LEARN-REAL (2019-2022) (EU H2020 Chist-Era Learn-Real) is the short name for “Learning Physical Manipulation Skills with Simulators Using Realistic Variations”. It proposes an innovative toolset comprising: i) a simulator with realistic rendering of variations, allowing the creation of datasets and the evaluation of algorithms in new situations; ii) a virtual-reality interface for interacting with the robots within their virtual environments, to teach robots object manipulation skills in multiple configurations of the environment; and iii) a web-based infrastructure for principled, reproducible and transparent benchmarking of learning algorithms for object recognition and manipulation by robots. Strongly AI-oriented technologies (Deep Reinforcement Learning, Deep Learning, Sim2Real transfer learning) are our main focus in this project.

LEARN-ASSIST (2021-2023) (Italy-Japan Government Cooperation in Science and Technology) is the short name for “Assistive Robotic System for Various Dressing Tasks through Robot Learning by Demonstration via Sim-to-Real Methods”. The objective is to develop an AI-empowered, general-purpose robotic system for dexterous manipulation of complex and unknown objects in rapidly changing, dynamic and unpredictable real-world environments. This will be achieved through intuitive embodied robotic demonstration between a human operator equipped with a motion tracking device and a robot controller empowered with AI-based vision and learning skills. The primary use case of such a system is assisting patients or elderly people with limited physical ability in their daily object manipulation tasks, e.g., putting on various clothes. (project website coming soon)

PhD positions starting in Autumn 2021 on topics under ongoing projects are open for application. If you are interested, please contact Prof. Fei Chen.

People

Leader: Prof. Fei Chen (contact: fei.chen -at- iit.it)

PhD Student (D3): Sunny Katyara (enrolled in UniNa, co-supervision with Prof. Fanny Ficuciello, Prof. Bruno Siciliano, on Learn-Real Project)

PhD Student (D2): Carlo Rizzardo (enrolled in UniGe, co-supervision with Prof. Darwin Caldwell, on Learn-Real Project)

PhD Student (D1): Miguel Fernandes (enrolled in UniGe, co-supervision with Prof. Darwin Caldwell, on VINUM project)

PhD Student (D2): Tao Teng (enrolled in Unicatt, co-supervision with Dr. Claudio Semini, Prof. Matteo Gatti, on VINUM project)

Fellow: Antonello Scaldaferri

Partners and Collaborations

We welcome all kinds of collaborations that speed up the development of intelligent robots addressing real-world applications, and we are always open to new and challenging opportunities to collaborate with other groups and institutions. We are also open to interested students and scholars who want to join the group, either for a short visit or for a longer stay as a PhD student or postdoc.

In the past years, we have collaborated in the robotics area with:

German Aerospace Center (Germany)

European Organization for Nuclear Research, CERN (Switzerland)

Prisma Lab @ Università degli Studi di Napoli Federico II (Italy)

Robot Learning & Interaction Group @ Idiap Research Institute (Switzerland)

Department of Sustainable Crop Production @ Università Cattolica del Sacro Cuore (Italy)

Robotics Institute @ Shenzhen Academy of Aerospace Technology (China)

Department of Mathematics @ École Centrale de Lyon (France)

T-Stone Robotics Institute @ The Chinese University of Hong Kong (HKSAR, China)